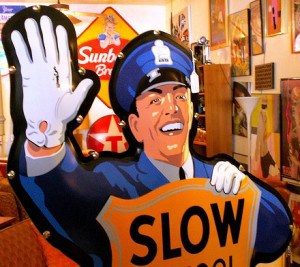

Your friendly Internet traffic cops, Time Warner Cable and Comcast, paid for research that suggests those Internet slowdowns are your fault (or at least not theirs).

A study from MIT suggests that broadband speed test results showing “real world” speeds far below what your provider promises are actually better than you think, and if they aren’t, it’s not your provider’s fault. The paper, Understanding Broadband Speed Measurements, finds that slow Internet speeds are often your problem: you run too many applications on your computer, visit inaccurate speed measurement sites, use a wireless router, or have run into an Internet traffic jam outside your ISP’s control.

The research comes courtesy of MIT’s Internet Traffic Analysis Study (MITAS) project, which is financially backed by some of North America’s largest cable and phone companies: Clearwire, Comcast, Liberty Global (chaired by Dr. John Malone), and Time Warner Cable in the United States, and Rogers Communications and Telus in Canada. Those providers also supply much of the broadband speed data MITAS relies on for its research. Additional assistance came from MIT’s Communications Futures Program, whose members include cable giant Comcast and Cisco, an equipment manufacturer and promoter of the “zettabyte” theory of broadband traffic overload.

The study was commissioned to consider whether broadband speed is a suitable metric for judging whether an ISP provides good or bad service to its customers, and whether speed-testing websites accurately depict actual broadband speeds. Because Congress and the Federal Communications Commission have set minimum speed goals and have expressed concern about whether providers actually deliver the speeds they promise, broadband speed is among the top priorities of the FCC’s National Broadband Plan.

“If you are doing measurements, and you want to look at data to support whatever your policy position is, these are the things that you need to be careful of,” Steve Bauer, technical lead on the MITAS project, told TG Daily. “For me, the point of the paper is to improve the understanding of the data that’s informing those processes.”

Bauer’s 39-page study indicts nearly everyone except service providers for underwhelming broadband speeds:

> While a principal motivation for many in looking at speed measurements is to assess whether a broadband access ISP is meeting its commitment to provide an advertised data service (e.g. “up to 20 megabits per second”), we conclude that most of the popular speed data sources fail to provide sufficiently accurate data for this purpose. In many cases, the reason a user measures a data rate below the advertised rate is due to bottlenecks on the user-side, at the destination server, or elsewhere in the network (beyond the access ISP’s control). A particularly common non-ISP bottleneck is the receive window (rwnd) advertised by the user’s transport protocol (TCP).
>
> In the NDT dataset we examine later in this paper, 38% of the tests never made use of all the available network capacity.
>
> Other non-ISP bottlenecks also exist that constrain the data rate well below the rate supported by broadband access connections. Local bottlenecks often arise in home wireless networks. The maximum rate of an 802.11b WiFi router (still a very common wireless router) is 11mbps. If wireless signal quality is an issue, the 802.11b router will drop back to 5.5mbps, 2mbps, and then 1 mbps. Newer wireless routers (e.g. 802.11g/n) have higher maximum speeds (e.g. 54 mbps) but will similarly adapt the link speed to improve the signal quality.
>
> End-users also can self-congest when other applications or family members share the broadband connection. Their measured speed will be diminished as the number of competing flows increase.
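The study’s argument boils down to a simple bottleneck model: a speed test reports the slowest of several limits, and only one of them is the access link the ISP sells. Here is a minimal Python sketch of that idea, built on simplifying assumptions and not taken from the MITAS methodology. It caps a single TCP flow at one receive window per round trip (rwnd/RTT), lets the WiFi link rate cap the whole path, and splits capacity equally among competing flows. The function names and every number are hypothetical illustrations.

```python
"""Hypothetical bottleneck model: a speed test sees the tightest of several limits."""


def window_limited_mbps(rwnd_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on one TCP flow: at most one receive window per round trip."""
    return rwnd_bytes * 8 / rtt_seconds / 1_000_000


def fair_share_mbps(capacity_mbps: float, competing_flows: int) -> float:
    """Idealized equal split between the speed test and other active flows."""
    return capacity_mbps / (competing_flows + 1)


def measured_mbps(
    access_mbps: float,
    wifi_mbps: float,
    rwnd_bytes: int,
    rtt_seconds: float,
    competing_flows: int,
) -> float:
    """Rate the test reports; only access_mbps is under the ISP's control."""
    # The home WiFi link and the access link both cap the path; the slower wins.
    path_capacity = min(access_mbps, wifi_mbps)
    # The test flow is then limited by its share of the path or by its window.
    return min(
        fair_share_mbps(path_capacity, competing_flows),
        window_limited_mbps(rwnd_bytes, rtt_seconds),
    )


if __name__ == "__main__":
    # Hypothetical "up to 20 Mbps" plan, 802.11b fallen back to 5.5 Mbps,
    # 64 KB receive window, 100 ms round trip, no other household traffic.
    print(round(window_limited_mbps(65_535, 0.100), 2))  # 5.24
    print(round(measured_mbps(20, 5.5, 65_535, 0.100, 0), 2))  # 5.24
    # Faster WiFi and a 1 MB window, but two other flows share the line.
    print(round(measured_mbps(20, 54, 1_048_576, 0.05, 2), 2))  # 6.67
```

With a 65,535-byte window (the largest TCP window without the window-scale option) and a 100 ms round trip, a single flow tops out near 5.24 Mbps no matter how fast the 20 Mbps line is. The model ignores packet loss, slow start, and congestion beyond the home, so it can only show why a low result need not implicate the ISP. It cannot prove that the ISP is blameless.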

The study also criticizes the FCC for relying on raw speed data that does not account for the tier of service a customer has chosen, claiming that many providers actually deliver faster service than their “lite” plans advertise.

In short, it’s everyone else’s fault (including yours) for those Internet speed slowdowns.

Ultimately, the report’s conclusion can be summed up in three words: change the subject. It’s not slow broadband speeds that are the problem — it’s the lack of understanding about what you can accomplish with the speeds you do get from your ISP:

> In the next few years, as the average speed of broadband increases, and the markets become more sophisticated, we expect that attention may shift towards a more nuanced characterization of what matters for evaluating the quality of broadband services. Issues such as availability (reliability) and latencies to popular content and services may become more important in how services are advertised and measured. We welcome such a more nuanced view and believe it is important even in so far as one’s principal focus is on broadband speeds.

One thing the paper does deliver at top speed is a set of industry talking points, particularly the one that says less regulation is better (underlining ours):

> Our hope is that progress may be made via a market-mediated process that engages users, academics, the technical standards community, ISPs, and policymakers in an open debate; one that will not require strong regulatory mandates. Market efficiency and competition will be best served if there is more and better understood data available on broadband speeds and other performance metrics of merit (e.g., pricing, availability, and other technical characteristics).

These kinds of research reports are often tainted by the industry money that pays for them. Researchers and universities routinely deliver industry-pleasing, sober-sounding studies in return for considerable financial contributions, grants, and other forms of underwriting. This report lacks full disclosure about who is helping to pay for it: North America’s largest cable operators, who also supply much of the data MITAS relies on for its research.

Ask yourself how much longer these companies would keep writing checks to MIT if its researchers had delivered a report implicating them in falsely advertising speeds they do not deliver, or in relying on inadequate upstream providers to handle their Internet traffic. The report pulls every punch that might land on the companies that finance it, a clear sign of bought-and-paid-for research in action.

I fully agree with this study: a big cause of apparently slow broadband speed is local computers, poor routers and bad wireless configurations. For example, 2.4 GHz 802.11b/g is affected by cordless phones and microwave ovens. But I also agree with Phil that the ISPs will use this as a distraction to divert attention from their service and price issues. My biggest criticism of Comcast was when they enforced a bandwidth cap with no metering tools. Imagine if your electric power usage was metered but you didn’t have any way of auditing the meter. It would be nice for…

It seems to me that there are indeed factors outside of the ISP’s control. That’s not to say they get a free pass, but lackluster speeds or performance caused by things outside the ISP’s realm of influence should be taken into consideration.

What the ISP should be held accountable for:

* Access speed as promised in the agreement when traversing the ISP’s network
* Performance of ISP-provided hardware
* Reliability of the ISP’s gateway servers that provide customer access to the Internet
* (arguably) Reliability of the ISP’s DNS servers

What the ISP cannot reasonably be held accountable for:

* Performance of…

Talk about a waste of time and money for this so-called study. The only people who really have an issue with their broadband speed are those in underserved rural areas, where they have sub-3 Mbps connections and are being passed by as the major cities get upgraded to 50 and 100 Mbps service. If you’ve already got 50 Mbps service, having a few percent of that lost to overhead, or constrained by limited bandwidth at your destination web site, isn’t a big concern. Any normal person will downgrade their internet plan to whatever can be used optimally as hi-speed…

None of this explains why we compare so poorly to other countries. Unless other countries have special routers that measure speeds directly at the source, the speed comparisons to other countries are valid. If the measurement technique is consistent across all countries, then our crappy ranking is legit even if the measurement technique is imperfect.

“None of this explains why we [the United States] compare so poorly to other countries.” – Excellent point!

Actually, in many cases fiber ISPs in other countries have very little infrastructure to maintain in terms of fiber miles per customer. They have their own speed test servers that people test from (this is a fact; look at the sponsors for speedtest.net in those countries), and they use peering extensively to get traffic between point A and point B within the country. In many cases these providers offer significantly lower speeds to destinations outside the country, or outside their ISP. Granted, many ISPs in the US operate their own speedtest.net nodes at this point; however, most have enough bandwidth to…

I used to pay for the premium “Turbo” tier of TWC’s RoadRunner cable Internet for two reasons: 1. The upstream bandwidth was 1Mbps vs. 384Kbps, and 2. I was running a TeamSpeak server for my friends and myself, as well as hosting some of the games we played. Eventually RR started packet shaping their network and even premium customers were affected (why am I being packet shaped if I’m paying a premium?), and a little later on they raised the standard RR upstream to 1Mbps anyway, making the premium service pointless. It didn’t help that…